The AI Edge: Lessons from Hedge Fund Adoption

From earnings call analysis to compliance stress tests, AI is reshaping hedge funds. But the real edge comes from building systems, not plugging in tools.

Welcome to Academic Signal, where we decode finance research into plain English to surface ideas that matter to professional investors.

How are hedge funds using AI to generate alpha?

A comprehensive review of 50 sources from 2022 to 2025 reveals systematic advantages for AI-adopting hedge funds

A Comprehensive Review of Generative AI Adoption in Hedge Funds: Trends, Use Cases, and Challenges (June 13, 2025)

Remember when we were all skeptical about whether AI would actually move the needle in hedge funds? Well, turns out we were asking the wrong question. It's not whether AI works – it's who's doing it right.

Since ChatGPT launched in 2022, adoption has surged: ~90% of hedge fund managers now use AI tools. AI in hedge funds is now seen as a systematic advantage… when done right. Leveraging AI isn’t about plugging in point solutions or using ChatGPT. It’s about rethinking how you process information (and this isn’t just for hedge funds: it applies just as well to PE and VC).

Where do most funds fall short? Feature engineering: turning raw data into contextualized data that a model can turn into useful insights. Maybe that’s why the last time you used AI you thought “this doesn’t work for me” 😉

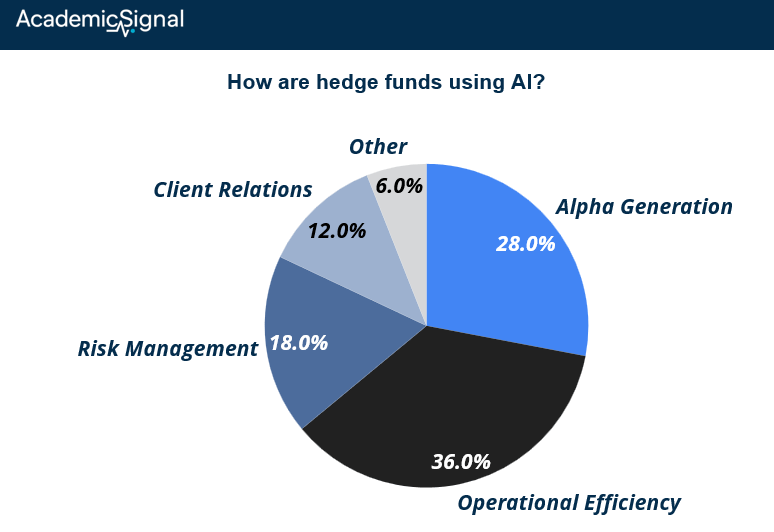

How are hedge funds using AI?

Hedge funds use AI most for processing earnings calls, news, SEC filings, and alternative data. The research breaks down what's actually working into four buckets:

Alpha Generation (28% of use cases): This is the sexy stuff everyone talks about. Think ChatGPT analyzing earnings calls in seconds, satellite imagery tracking supply chains, or processing regulatory filings faster than any human team could.

AI can go through a 10-K, analyze all its footnotes, and compare the minutiae with past 10-Ks to identify what language management is tweaking and what that might mean. It flags things that analysts would never have time to catch.
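To make the 10-K comparison idea concrete, here is a minimal sketch using only Python's standard `difflib`. It is not the paper's method or any fund's actual pipeline: the function names, the naive sentence splitter, and the two filing excerpts are all invented for illustration. A real system would work on parsed filing sections and likely use a language model to interpret the changes.

```python
import difflib
import re


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter; real filings need a proper NLP tokenizer."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def changed_language(prior: str, current: str) -> dict[str, list[str]]:
    """Return sentences that were dropped from, or added to, the current filing."""
    old, new = split_sentences(prior), split_sentences(current)
    removed, added = [], []
    for line in difflib.ndiff(old, new):
        if line.startswith("- "):
            removed.append(line[2:])  # present last year, gone this year
        elif line.startswith("+ "):
            added.append(line[2:])    # new wording this year
    return {"removed": removed, "added": added}


# Hypothetical excerpts, made up for this example.
prior_10k = "We expect demand to remain strong. Our supplier base is diversified."
current_10k = "We expect demand to remain stable. Our supplier base is diversified."

diff = changed_language(prior_10k, current_10k)
print(diff)
```

The diff only tells you what changed ("strong" became "stable"). Deciding whether that change matters is where the analyst, or a model fed with these flagged sentences, comes in.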

Operational Efficiency (36% - the biggest slice): Honestly, this might be more important than the alpha generation. We're talking about cutting DDQ response times from 40 hours to 4 hours. Automating client reports. Having AI handle the grunt work so your best people can focus on the stuff that actually matters.

Risk Management (18%): AI is getting scary good at stress testing portfolios and spotting compliance issues before they become problems. It can generate synthetic market scenarios and monitor millions of transactions for red flags. Your compliance team probably loves this.
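For a feel of what "synthetic market scenarios" means in practice, here is a deliberately simple sketch. It uses a plain bootstrap (resampling past returns), not a generative AI model, so treat it as a stand-in for the idea rather than what the funds in the paper actually run. The return history, function names, and parameters are all hypothetical.

```python
import random


def bootstrap_scenarios(returns, horizon, n_scenarios, seed=0):
    """Build synthetic cumulative returns by resampling historical daily returns."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    scenarios = []
    for _ in range(n_scenarios):
        value = 1.0
        for _ in range(horizon):
            value *= 1.0 + rng.choice(returns)
        scenarios.append(value - 1.0)
    return scenarios


def value_at_risk(scenarios, level=0.95):
    """Loss that is exceeded in roughly (1 - level) of the scenarios."""
    ordered = sorted(scenarios)
    index = int((1.0 - level) * len(ordered))
    return -ordered[index]


# Hypothetical daily returns, invented for illustration.
history = [0.01, -0.02, 0.005, 0.015, -0.03, 0.02, -0.01, 0.0]

scenarios = bootstrap_scenarios(history, horizon=10, n_scenarios=1000)
var_95 = value_at_risk(scenarios)
print(f"10-day 95% VaR estimate: {var_95:.2%}")
```

A generative approach would replace `rng.choice(returns)` with samples from a trained model, which is where the extra realism (and the extra explainability burden) comes from.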

Client Relations (12%): From automated RFP responses to personalized investor updates, AI is handling a lot of the relationship management workload. It's not replacing the relationship – it's making it more efficient.

The secret sauce: architecture actually matters

The funds that outperform aren't just throwing raw data at AI models or just applying ChatGPT. They're building actual systems.

The winning approach looks like this:

Data layer that ingests both structured (market data) and unstructured data (earnings calls and news)

Feature engineering that translates raw data into contextualized data a model can turn into useful insights

Model layer running multiple AI models for different tasks

Application layer that delivers insights to PMs and risk teams

Governance layer keeping everything compliant and explainable
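The five layers above can be sketched as a toy pipeline. This is an illustration of the separation of concerns only, under heavy assumptions: every function name, the two made-up tickers, and the hand-written "model" rule are hypothetical, and a real stack would put databases, model services, and review workflows behind each step.

```python
from dataclasses import dataclass


@dataclass
class Insight:
    ticker: str
    signal: float
    explanation: str  # kept so the governance layer can audit it


def data_layer() -> list[dict]:
    """Ingest structured (prices) and unstructured (text) inputs. Toy records here."""
    return [
        {"ticker": "AAA", "prices": [100.0, 102.0], "transcript": "we expect growth"},
        {"ticker": "BBB", "prices": [50.0, 49.0], "transcript": "results may be uncertain"},
    ]


def feature_layer(record: dict) -> dict:
    """Turn raw inputs into contextualized features."""
    first, last = record["prices"][0], record["prices"][-1]
    return {
        "ticker": record["ticker"],
        "return": last / first - 1.0,
        "uncertain": "uncertain" in record["transcript"],
    }


def model_layer(features: dict) -> Insight:
    """Stand-in for one or more models; here a trivial hand-written rule."""
    signal = features["return"] - (0.01 if features["uncertain"] else 0.0)
    why = f"return={features['return']:.2%}, uncertain={features['uncertain']}"
    return Insight(features["ticker"], signal, why)


def governance_layer(insight: Insight, audit_log: list[str]) -> Insight:
    """Record every output with its explanation so it can be reviewed later."""
    audit_log.append(f"{insight.ticker}: {insight.explanation}")
    return insight


def application_layer(insights: list[Insight]) -> list[str]:
    """Deliver insights to PMs and risk teams, strongest signal first."""
    ranked = sorted(insights, key=lambda i: i.signal, reverse=True)
    return [i.ticker for i in ranked]


audit_log: list[str] = []
insights = [
    governance_layer(model_layer(feature_layer(record)), audit_log)
    for record in data_layer()
]
ranking = application_layer(insights)
print(ranking)
print(audit_log)
```

The point of the structure is that each layer can be swapped or audited on its own: a new data source only touches `data_layer`, and nothing reaches the application layer without passing through governance first.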

The funds trying to bolt AI onto their existing systems? They're missing the real transformation. This isn't about adding a tool – it's about rethinking how you process information entirely.

Where investors often fall short when applying AI (feature engineering)

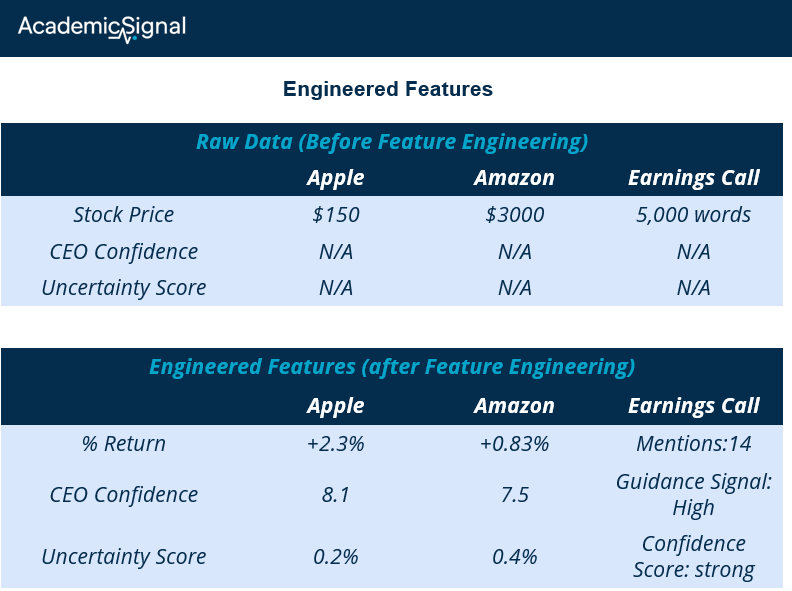

The most misunderstood aspect of properly applying AI may be feature engineering.

Think of feature engineering like this: imagine giving someone a pile of ingredients and asking them to cook dinner without telling them what dish to make. That's essentially what happens when you feed raw data to AI models. The AI sees Apple at $150 and Amazon at $3,000 and thinks Amazon is "20x more important" just because of the price. It completely misses the context that matters.

Feature engineering is the translation layer that turns meaningless numbers into insights AI can actually use. Instead of raw prices, you feed the model percentage returns, volatility-adjusted scores, and distance from 52-week highs. Suddenly the AI can see that a 2% move in a biotech penny stock is normal while a 2% move in Apple might be massive. It's like giving the AI reading glasses: same data, but now it can actually see the patterns that matter.
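Here is a minimal sketch of the three price features mentioned above, using only the Python standard library. The function names and both price series are invented for illustration, and the choices made here (sample standard deviation of prior returns as "volatility", the maximum over the last `window` prices as the "52-week high") are assumptions, not the paper's definitions.

```python
from statistics import stdev


def pct_returns(prices: list[float]) -> list[float]:
    """Simple period-over-period percentage returns."""
    return [b / a - 1.0 for a, b in zip(prices, prices[1:])]


def price_features(prices: list[float], window: int = 252) -> dict[str, float]:
    """Scale-free features for the latest price move.

    Assumes at least four prices and a non-constant history, so that the
    volatility estimate exists and is non-zero.
    """
    if len(prices) < 4:
        raise ValueError("need at least four prices")
    rets = pct_returns(prices)
    vol = stdev(rets[:-1])               # volatility before the latest move
    recent_high = max(prices[-window:])  # proxy for the 52-week high
    return {
        "last_return": rets[-1],
        "vol_adjusted_move": rets[-1] / vol,
        "dist_from_high": prices[-1] / recent_high - 1.0,
    }


# Two made-up series that both end with a 2% up move.
calm_large_cap = [100.0, 100.5, 100.0, 100.5, 100.0, 102.0]
jumpy_penny_stock = [1.00, 1.10, 0.95, 1.08, 1.00, 1.02]

calm = price_features(calm_large_cap)
jumpy = price_features(jumpy_penny_stock)
print(calm)
print(jumpy)
```

Both series show the same raw 2% return, but the volatility-adjusted move is far larger for the calm stock. That is the context a model never sees if you hand it raw prices.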

Here's an example: raw earnings call transcripts are just 5,000 words of text to an AI model. But feature engineering extracts CEO confidence scores, forward guidance mentions, and uncertainty word frequency. Now the AI can spot that "when confidence >7.8 AND guidance mentions >12 AND uncertainty <0.3%, stocks outperform 73% of the time."
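A rough sketch of the transcript side, again in plain Python. The word lists, function names, and sample sentence are hypothetical, and simple counting is a stand-in for whatever the funds in the paper actually use. The CEO confidence score is not computed here at all: it would need a trained model, so the rule just takes it as an input. The thresholds are copied from the example above.

```python
import re

# Hypothetical word lists chosen for illustration; production systems
# typically rely on curated finance lexicons or trained models.
UNCERTAINTY_WORDS = {"may", "might", "uncertain", "uncertainty", "possibly", "risk"}
GUIDANCE_PHRASES = ("we expect", "guidance", "outlook", "we anticipate")


def transcript_features(text: str) -> dict[str, float]:
    """Count-based features from an earnings call transcript."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    uncertain = sum(1 for w in words if w in UNCERTAINTY_WORDS)
    guidance = sum(lowered.count(p) for p in GUIDANCE_PHRASES)
    return {
        "word_count": len(words),
        "uncertainty_freq": uncertain / len(words) if words else 0.0,
        "guidance_mentions": guidance,
    }


def matches_rule(confidence: float, features: dict[str, float]) -> bool:
    """Thresholds taken from the example in the text; confidence is supplied externally."""
    return (
        confidence > 7.8
        and features["guidance_mentions"] > 12
        and features["uncertainty_freq"] < 0.003  # 0.3%
    )


sample = "We expect revenue growth. Our outlook is strong, though demand may soften."
feats = transcript_features(sample)
print(feats)
print(matches_rule(8.5, feats))
```

On a one-line sample the rule will not fire, since it needs more than 12 guidance mentions. The useful part is the shape: 5,000 words of text become a handful of numbers a model can learn from.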

The human analyst's domain knowledge gets baked into features, which the AI can then turn into signals the analyst would miss. This is where Human + AI >> either on their own.

This is why the best hedge funds aren't just hiring AI engineers – they're building feature engineering teams that combine market expertise with technical skills.

The models might be commoditized, but knowing which features to extract from earnings calls, satellite imagery, and supply chain data? That's where the real alpha generation happens. It's not about having better AI – it's about teaching your AI to see what actually matters.

The picture isn’t rosy all the way though…

First, there's this "herding risk" that should keep you up at night. If everyone's using similar AI models on similar data sets, we could see market volatility spike by 12-15%. Think about 2007 when everyone had similar risk models – except now it's happening in real time.

Then there's the explainability problem. Try explaining to a client why your AI model recommended a trade. What’s really scary is when you ask a model a second time and it gives you a different response! "The black box said so" doesn't exactly inspire confidence. And good luck explaining that to regulators.

Plus, the implementation costs are real. Smaller funds are getting priced out, which might actually be creating opportunities for the shops that can afford to do this right. Or maybe it’s time for aggressive consolidation in the industry? 🤯

AI is revolutionizing investing

By 2027, the paper’s author predicts that 95% of funds will be using AI tools. But the early adopters are building such big leads that "me too" implementations might not cut it.

AI talent costs are going up 25% annually. 40% of analyst roles are being transformed into AI oversight positions. This isn't just technology adoption – it's organizational transformation.

The smart money is already thinking about 2025-2030 trends: specialized AI hedge funds, synthetic data growing 300% annually, quantum computing integration. If you're not thinking about this stuff now, you're already behind.

What you should be asking yourself NOW

The performance gap between AI adopters and everyone else seems to be widening every quarter. If you don’t want to fall behind, here are the questions that actually matter:

Do you have a real AI architecture, or just point solutions? Are you building systems or just using ChatGPT?

What's your data edge? Everyone has Bloomberg terminals. What unique data are you processing?

How do you handle the black box problem? Can you explain your models when things go wrong?

Are you building internal capabilities, or outsourcing everything? This says a lot about your long-term thinking.

Disclaimer

Academic Signal is for educational purposes only and isn't investment advice – always consult qualified professionals before making financial decisions. While we work hard to accurately interpret research, our analysis represents our own perspective, and academic studies can be interpreted differently (or may even be flawed in certain areas). Think of this as sharing interesting research with a colleague, not professional guidance.